SpaceNet 6

Multi-Sensor All-Weather Mapping

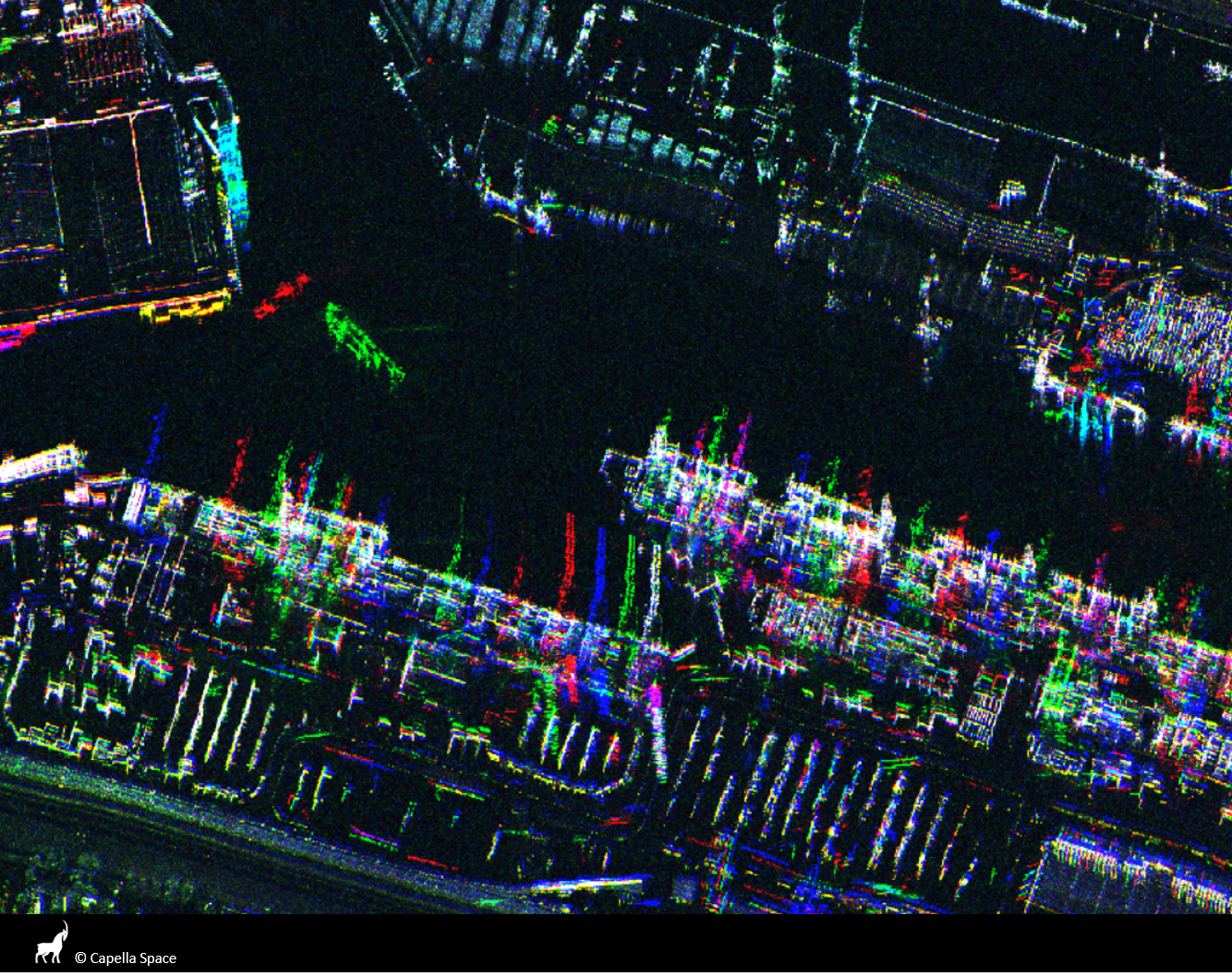

Synthetic Aperture Radar (SAR) is a unique form of radar that can penetrate clouds, collect in all weather conditions, and capture data day and night. Imagery collected by SAR satellites could be particularly valuable for disaster response, where weather and cloud cover can obstruct traditional electro-optical sensors. Despite these advantages, however, little open data is available for researchers to explore the effectiveness of SAR in such applications, particularly at ultra-high resolutions.

The task of SpaceNet 6 is to automatically extract building footprints with computer vision and artificial intelligence (AI) algorithms, using a combination of SAR and electro-optical (EO) imagery. This openly licensed dataset pairs half-meter SAR imagery from Capella Space with half-meter EO imagery from Maxar's WorldView-2 satellite. The area of interest is centered on the largest port in Europe: Rotterdam, the Netherlands. The area contains thousands of buildings, vehicles, and boats of various sizes, making it an effective test bed for SAR and for the fusion of these two data types.
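The challenge description does not prescribe a method for extracting footprints. One common pattern, assumed here purely for illustration, is to predict a per-pixel building mask and then group building pixels into individual footprints. The sketch below does that grouping with a 4-connected flood fill and uses the half-meter pixel size to estimate each footprint's ground area (0.5 m × 0.5 m = 0.25 m² per pixel, assuming square pixels at exactly that spacing). The function name `footprints_from_mask` and the list-of-lists mask format are hypothetical, not part of the SpaceNet tooling.

```python
from collections import deque

# Assumed ground sample distance: half-meter pixels, as stated for the imagery.
GSD_M = 0.5


def footprints_from_mask(mask):
    """Group building pixels (value 1) into 4-connected footprints.

    Hypothetical post-processing step: returns a list of dicts, each holding
    the footprint's pixel coordinates and its approximate area in square metres.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    footprints = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill starting from an unvisited building pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Each pixel covers GSD_M x GSD_M square metres on the ground.
            footprints.append({"pixels": pixels, "area_m2": len(pixels) * GSD_M ** 2})
    return footprints


# Usage: a toy 3x4 mask containing two separate buildings.
mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 1],
]
buildings = footprints_from_mask(mask)
```

Here the 2×2 block becomes one footprint of 1.0 m² and the two-pixel strip on the right a second footprint of 0.5 m².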

In this challenge, the training dataset contains both SAR and EO imagery; the testing and scoring datasets, however, contain only SAR data. The EO data can therefore be used to pre-process the SAR data in some fashion, such as colorization, domain adaptation, or image translation, but cannot be used to map buildings directly. This structure mimics real-world scenarios in which historical EO data may be available, but concurrent EO and SAR collection is often impossible because the sensors follow different orbits or because cloud cover renders the EO data unusable.
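As one illustration of using EO data only for pre-processing, the sketch below swaps in a deliberately simple colorization: a per-pixel linear regression, fit on the training split, that maps SAR channel values to EO band values. At test time only SAR is available, so the learned map is applied to SAR tiles to produce pseudo-EO channels that a building detector could consume alongside the raw SAR. The function names, the four SAR channels, the three EO bands, and the synthetic arrays are illustrative assumptions; practical entries would use much richer image-translation models, and pixel-wise fitting assumes the SAR and EO tiles are co-registered.

```python
import numpy as np


def fit_sar_to_eo(sar_train, eo_train):
    """Fit a per-pixel linear map (with bias) from SAR channels to EO bands.

    sar_train: (N, H, W, C_sar) co-registered SAR tiles, training split only.
    eo_train:  (N, H, W, C_eo) matching EO tiles, training split only.
    Returns a weight matrix of shape (C_sar + 1, C_eo).
    """
    x = sar_train.reshape(-1, sar_train.shape[-1])
    y = eo_train.reshape(-1, eo_train.shape[-1])
    x = np.hstack([x, np.ones((x.shape[0], 1))])  # append a bias column
    weights, *_ = np.linalg.lstsq(x, y, rcond=None)
    return weights


def colorize(sar, weights):
    """Apply the learned map to SAR-only tiles; no EO input is needed here."""
    flat = sar.reshape(-1, sar.shape[-1])
    flat = np.hstack([flat, np.ones((flat.shape[0], 1))])
    pseudo_eo = flat @ weights
    return pseudo_eo.reshape(*sar.shape[:-1], weights.shape[1])


# Usage with synthetic data: 4 hypothetical SAR channels -> 3 EO bands.
rng = np.random.default_rng(0)
true_w = rng.normal(size=(5, 3))  # 4 SAR weights + 1 bias per EO band
sar_train = rng.random((2, 8, 8, 4))
eo_train = np.concatenate([sar_train, np.ones((2, 8, 8, 1))], axis=-1) @ true_w

w = fit_sar_to_eo(sar_train, eo_train)      # EO used only while fitting
sar_test = rng.random((1, 8, 8, 4))         # test split: SAR only
pseudo_eo = colorize(sar_test, w)
# Detector input: raw SAR plus pseudo-EO, all derived from SAR at test time.
model_input = np.concatenate([sar_test, pseudo_eo], axis=-1)
```

The design keeps EO strictly on the fitting side: `colorize` takes only SAR, so every channel the detector sees at test time is derived from SAR, which respects the rule that EO cannot be used to map buildings directly.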