SpaceNet 7

Multi-Temporal Urban Development Challenge

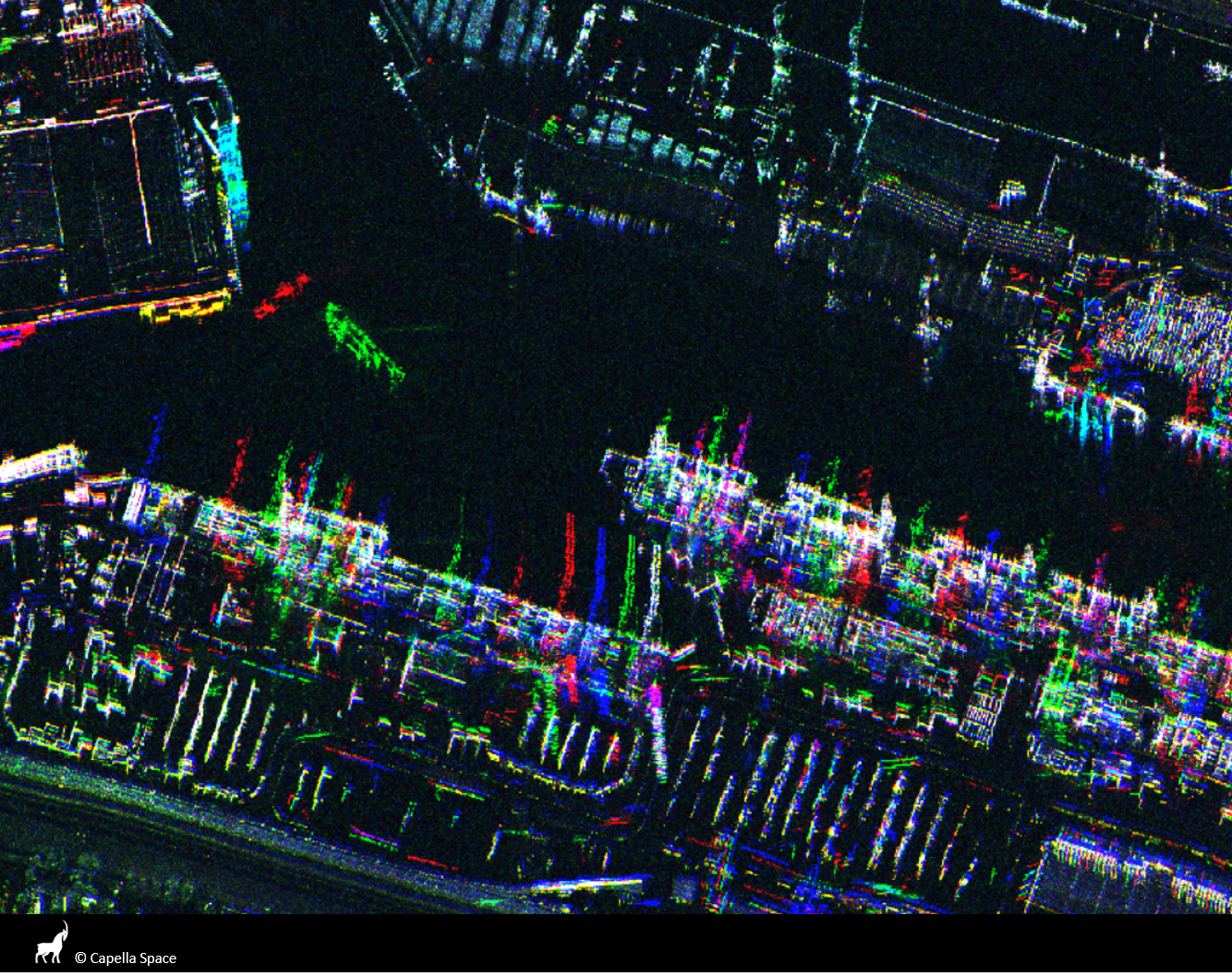

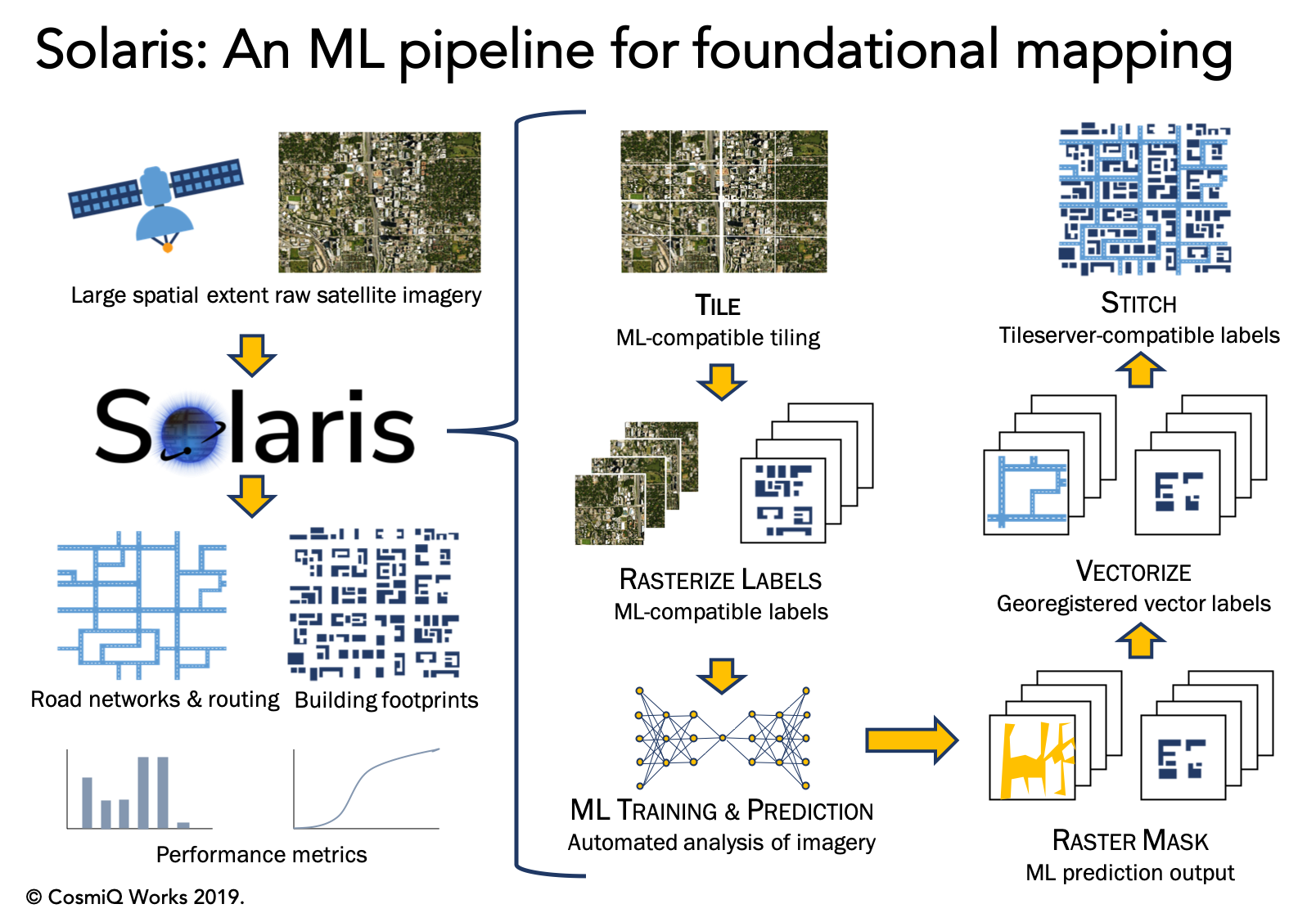

Quantifying population statistics is fundamental to 67 of the 232 indicators for the United Nations Sustainable Development Goals, but the World Bank estimates that more than 100 countries currently lack effective civil registration systems. The SpaceNet 7 Multi-Temporal Urban Development Challenge aims to help address this deficit and to develop novel computer vision methods for non-video time series data. In this challenge, participants will identify and track buildings in satellite imagery time series collected over rapidly urbanizing areas. The competition centers on a new open source dataset of Planet satellite imagery mosaics, with 24 monthly images for each of ~100 unique geographies. The dataset will comprise 40,000 km² of imagery with exhaustive polygon labels of building footprints, totaling over 3 million individual annotations. Challenge participants will be asked to track building construction over time, thereby directly assessing urbanization.

This challenge has broad implications for disaster preparedness, the environment, infrastructure development, and epidemic prevention. Beyond these humanitarian applications, the competition poses a unique computer vision problem. Each object covers a small pixel area, object density within images is high, and the changes between consecutive images are far larger than the frame-to-frame variation in video object tracking. We believe this challenge will aid efforts to develop useful tools for overhead change detection.

SpaceNet 7 will be featured as a competition at the 2020 NeurIPS conference in December, where winning results will also be announced.