Satellite Imagery Multiscale Rapid Detection with Windowed Networks (SIMRDWN) Repository

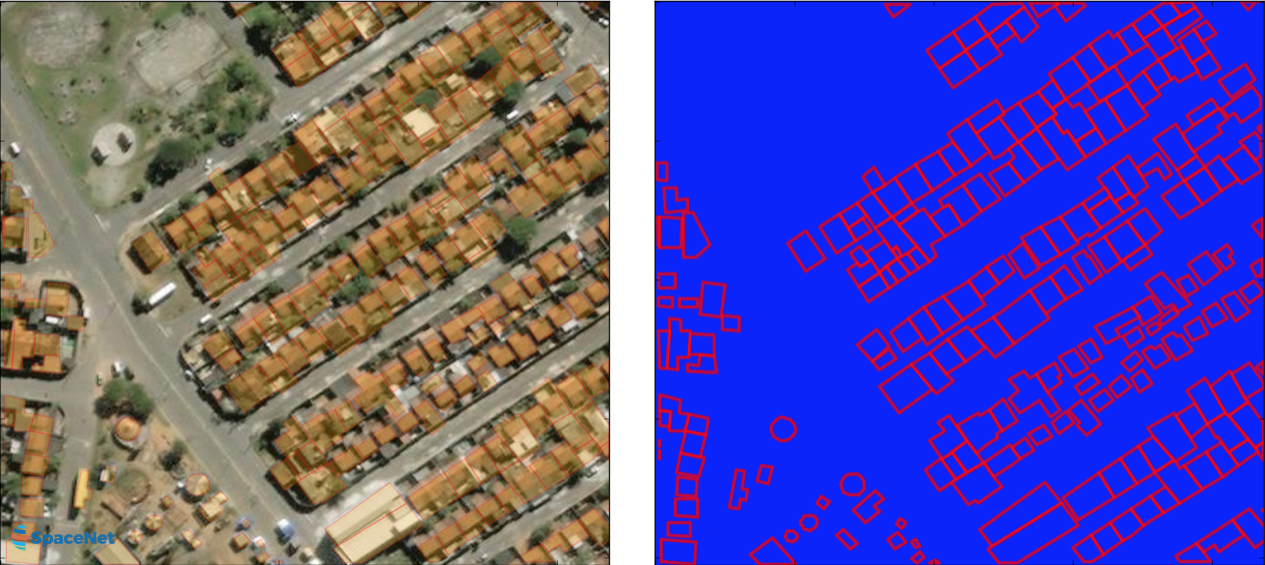

Rapid detection of small objects over large areas remains one of the principal drivers of interest in satellite imagery analytics. This project sought to build on our previous work with the You Only Look Twice (YOLT) algorithm, which modified YOLO to rapidly analyze images of arbitrary size and to improve performance on small, densely packed objects.
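The windowing idea that lets YOLT handle images of arbitrary size can be sketched roughly as follows. A large scene is cut into overlapping fixed-size chips, a detector runs on each chip, and chip-level boxes are shifted back into scene coordinates. This is a minimal illustration under stated assumptions, not the actual YOLT or SIMRDWN code. The function names `window_origins`, `tile_image`, and `to_global`, the 416-pixel window, and the 20% overlap are all hypothetical choices.

```python
from typing import List, Tuple


def window_origins(length: int, window: int, overlap: float) -> List[int]:
    """Start offsets along one axis so that windows of size `window`,
    overlapping by the fraction `overlap`, cover the range [0, length).

    Hypothetical helper for illustration only.
    """
    if window >= length:
        # Image is no larger than one window along this axis; a real
        # pipeline would pad or resize the single chip.
        return [0]
    # Step between windows; max(1, ...) guards against a zero stride.
    stride = max(1, int(window * (1.0 - overlap)))
    starts = list(range(0, length - window + 1, stride))
    # Snap a final window to the far edge so no pixels are skipped.
    if starts[-1] + window < length:
        starts.append(length - window)
    return starts


def tile_image(height: int, width: int, window: int = 416,
               overlap: float = 0.2) -> List[Tuple[int, int]]:
    """Return (y0, x0) upper-left corners of every chip in the scene.

    The 416-pixel window and 0.2 overlap are assumed defaults.
    """
    return [(y0, x0)
            for y0 in window_origins(height, window, overlap)
            for x0 in window_origins(width, window, overlap)]


def to_global(box: Tuple[int, int, int, int], y0: int, x0: int
              ) -> Tuple[int, int, int, int]:
    """Shift a chip-local (xmin, ymin, xmax, ymax) box into scene coordinates."""
    xmin, ymin, xmax, ymax = box
    return (xmin + x0, ymin + y0, xmax + x0, ymax + y0)


if __name__ == "__main__":
    chips = tile_image(1000, 1000)
    print(len(chips), "chips")
    print(to_global((10, 20, 30, 40), y0=100, x0=200))
```

For a 1000 x 1000 pixel scene with these assumed settings, each axis gets window starts at 0, 332, and 584, giving nine chips. The last window is snapped to the image edge rather than padded, so the overlap there is larger than 20%. Because adjacent chips overlap, the same object can be detected more than once, which is why a windowed pipeline typically merges the global boxes afterward, for example with non-maximum suppression.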

YOLO is only one of many advanced object detection frameworks, however, and algorithms such as SSD, Faster R-CNN, and R-FCN also merit investigation for geospatial applications. CosmiQ therefore developed the Satellite Imagery Multiscale Rapid Detection with Windowed Networks (SIMRDWN) framework. SIMRDWN (pronounced [SIM-er] [doun]) combines the scalable code base of YOLT with the TensorFlow Object Detection API, allowing end users to choose from a wide range of architectures for bounding box detection of objects in overhead imagery.